Evaluation of DeepSeek-R1 and ChatGPT-4o on the Chinese national medical licensing examination: a multi-year comparative study

Appendix Tables 1 and 2 report the accuracy of ChatGPT-4o and DeepSeek-R1 on NMLE questions, grouped by unit and year from 2017 to 2021. DeepSeek-R1 achieved higher accuracy than ChatGPT-4o, with especially high scores in 2020 and 2021. In 2017, for example, ChatGPT-4o scored 46.00% on Unit 3 and averaged 52.33%, whereas DeepSeek-R1 reached 90.00% on Unit 1 in the same year. DeepSeek-R1 remained high in other years as well, reaching 96.67% on Unit 2 in 2020. Overall, DeepSeek-R1’s performance was stable from year to year, while ChatGPT-4o’s scores fluctuated more.

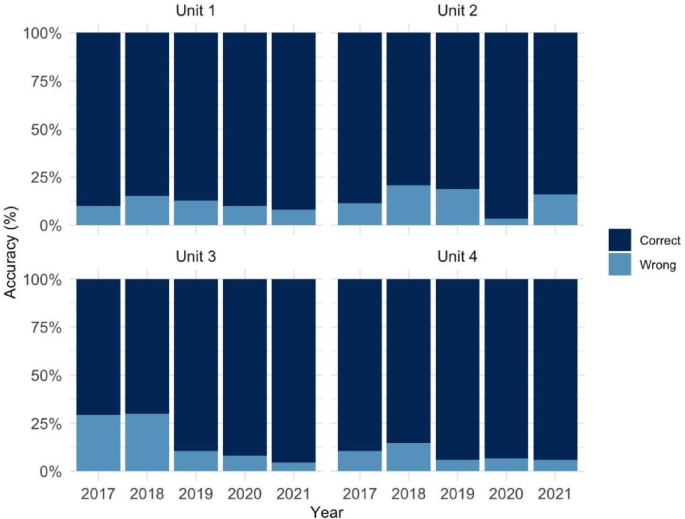

The detailed performance data are summarized visually in Fig. 3 (Appendix Tables 1 and 2, which provide detailed unit-by-unit accuracy, are available in the Supplementary Appendix).

A two-way analysis of variance (ANOVA) was conducted to assess the effects of model (ChatGPT-4o vs. DeepSeek-R1) and year (2017–2021) on answer accuracy.

The results revealed significant main effects of model (F(1,26) = 1653.64, p < 0.001, η² = 0.98) and year (F(4,26) = 19.05, p < 0.001, η² = 0.42), as well as a moderate model–year interaction (F(4,26) = 5.77, p = 0.024, η² = 0.18).

These results confirm that model type plays a dominant role in performance, with DeepSeek-R1 consistently outperforming ChatGPT-4o across all years and subject areas.
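For readers who want to see how F statistics of this kind arise, the following is a minimal sketch of a balanced two-way ANOVA with an interaction term. It is not the authors' analysis code: the function name `two_way_anova_f`, the two-year layout, and the accuracy values in `example_cells` are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
from itertools import product
from statistics import mean


def two_way_anova_f(cells):
    """Return F statistics for a balanced two-way design with interaction.

    `cells` maps (model, year) -> list of accuracy values; every cell must
    hold the same number of values (at least two).
    """
    models = sorted({m for m, _ in cells})
    years = sorted({y for _, y in cells})
    a, b = len(models), len(years)
    n = len(next(iter(cells.values())))  # replicates per cell

    grand = mean(v for vals in cells.values() for v in vals)
    cell_mean = {k: mean(vals) for k, vals in cells.items()}
    model_mean = {m: mean(cell_mean[(m, y)] for y in years) for m in models}
    year_mean = {y: mean(cell_mean[(m, y)] for m in models) for y in years}

    # Sums of squares for the two main effects, the interaction, and the error.
    ss_model = b * n * sum((model_mean[m] - grand) ** 2 for m in models)
    ss_year = a * n * sum((year_mean[y] - grand) ** 2 for y in years)
    ss_cells = n * sum((cell_mean[k] - grand) ** 2 for k in product(models, years))
    ss_inter = ss_cells - ss_model - ss_year
    ss_error = sum((v - cell_mean[k]) ** 2 for k, vals in cells.items() for v in vals)

    ms_error = ss_error / (a * b * (n - 1))
    return {
        "model": (ss_model / (a - 1)) / ms_error,
        "year": (ss_year / (b - 1)) / ms_error,
        "model:year": (ss_inter / ((a - 1) * (b - 1))) / ms_error,
    }


# Hypothetical accuracies (%), two values per cell; NOT the study data.
example_cells = {
    ("ChatGPT-4o", 2017): [50.0, 54.0],
    ("ChatGPT-4o", 2018): [60.0, 64.0],
    ("DeepSeek-R1", 2017): [90.0, 94.0],
    ("DeepSeek-R1", 2018): [92.0, 96.0],
}
f_stats = two_way_anova_f(example_cells)
```

In this toy layout the model factor dominates the year and interaction terms, which mirrors the qualitative pattern reported above; the numerical values are not comparable to the study's.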

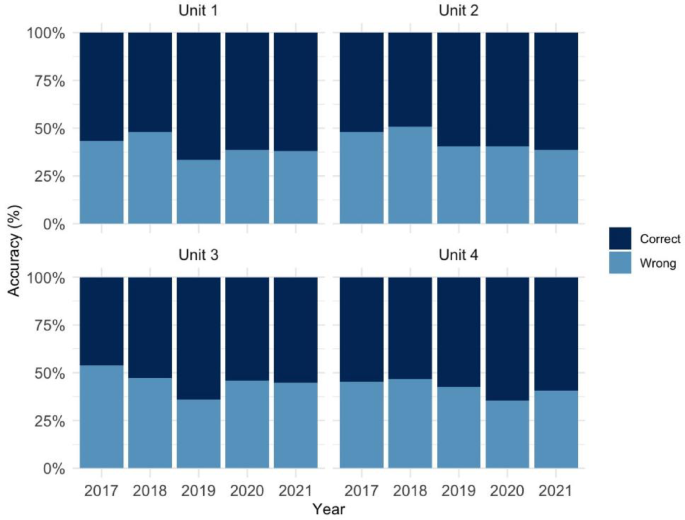

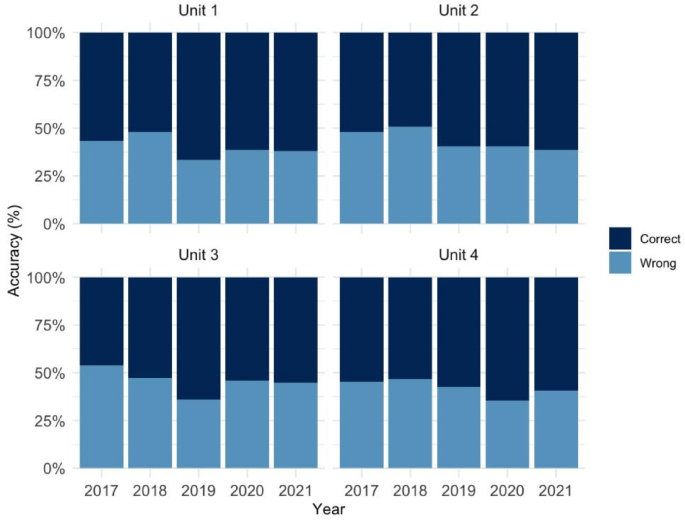

Figure 1 presents the annual performance of ChatGPT-4o across four subject units, highlighting considerable year-to-year variability, especially in Unit 3 during earlier years. Figure 2 shows DeepSeek-R1’s performance under the same conditions, where the model consistently maintained high accuracy across all units and years. Figure 3 provides a direct comparison of the average annual accuracy between the two models, clearly illustrating the performance gap, with DeepSeek-R1 demonstrating stronger and more stable outcomes over time.

Accuracy of ChatGPT-4o across four clinical units from 2017 to 2021.

Accuracy of DeepSeek-R1 across four clinical units from 2017 to 2021.

Average accuracy (%) of ChatGPT-4o and DeepSeek-R1 from 2017 to 2021.

As shown in Fig. 1, ChatGPT-4o’s accuracy varied across clinical units and years, with Units 1 and 3 exhibiting relatively lower proportions of correct answers compared to Units 2 and 4. Notably, Unit 1 showed a visible drop in accuracy from 2017 to 2019, followed by a moderate recovery. The results suggest unit-specific challenges in question answering, highlighting potential differences in content complexity or model limitations in certain domains.

In Fig. 2, DeepSeek-R1 achieved consistently high accuracy across all four clinical units and years, with most units showing over 85% correct responses throughout the evaluation period. Compared to ChatGPT-4o (Fig. 1), DeepSeek-R1 demonstrated significantly lower error rates, especially in Unit 1 and Unit 3, suggesting improved robustness in domains previously identified as challenging.

Figure 3 summarizes the overall performance comparison between ChatGPT-4o and DeepSeek-R1 from 2017 to 2021. DeepSeek-R1 consistently outperformed ChatGPT-4o across all years, achieving high average accuracy (approaching 95%) with minimal variance. In contrast, ChatGPT-4o’s performance fluctuated around 55–65%, with relatively larger standard deviations. These findings corroborate the trends observed in Figs. 1 and 2, highlighting the superior consistency and generalization capacity of DeepSeek-R1 across multiple clinical domains and years.

Generalized linear mixed model (GLMM) analysis

Table 1 presents fixed-effect estimates from the generalized linear mixed model (GLMM) assessing the impact of model type (ChatGPT-4o vs. DeepSeek-R1), exam year, and subject unit on the probability of correctly answering Chinese NMLE questions. DeepSeek-R1 significantly outperformed ChatGPT-4o overall (β = −1.829, p < 0.001). Compared to the 2017 baseline, ChatGPT-4o’s performance decreased significantly in 2019–2021 (p < 0.05).

Among subject units, Unit 3 showed the most favorable outcome: compared to Unit 1 (reference), its predicted accuracy was significantly higher (β = 0.344, p = 0.001), the best overall performance among subject units. A significant interaction was observed in 2020 (p = 0.009), indicating an amplified performance gap between the two models that year. All estimates are based on log-odds; negative coefficients indicate higher predicted accuracy.
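Because the coefficients are on the log-odds scale, a coefficient β multiplies the odds of the modeled outcome by exp(β). The sketch below converts the two coefficients quoted above into odds ratios and shows how a log-odds shift moves a probability. The helper names and the 0.80 baseline probability are hypothetical and used for illustration only.

```python
import math


def odds_ratio(beta):
    """Odds ratio implied by a log-odds coefficient."""
    return math.exp(beta)


def shifted_probability(baseline_prob, beta):
    """Probability after adding `beta` to the log-odds of `baseline_prob`."""
    logit = math.log(baseline_prob / (1 - baseline_prob)) + beta
    return 1 / (1 + math.exp(-logit))


unit3_or = odds_ratio(0.344)    # coefficient reported for Unit 3
model_or = odds_ratio(-1.829)   # coefficient reported for model type

# Hypothetical baseline probability of 0.80, shifted by the Unit 3 coefficient.
shifted = shifted_probability(0.80, 0.344)
```

Under these assumptions, exp(0.344) is roughly 1.41 and exp(−1.829) is roughly 0.16, so the model-type effect is much larger in magnitude than the unit effect.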

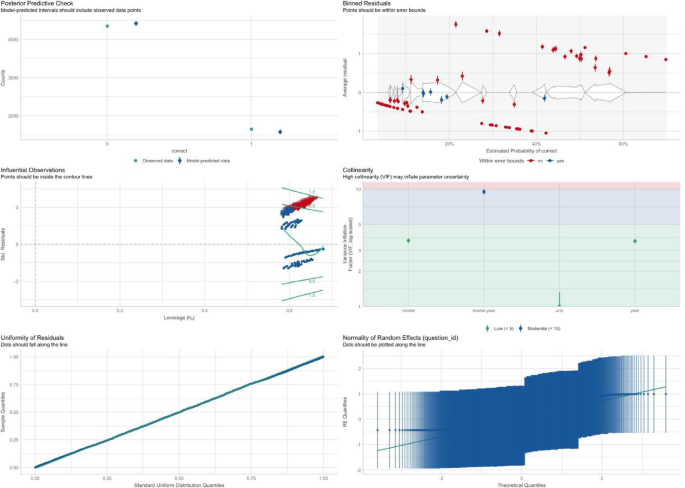

To assess the robustness of the GLMM specified as correct ~ model * year + unit + (1 | question_id), we conducted a series of diagnostic checks. As shown in Fig. 4, the model fit is statistically valid and interpretable.
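Written out, the formula above corresponds to a random-intercept logistic regression. The notation below is a sketch under our own labelling, not notation taken from the study: the index functions m[i], y[i], u[i], q[i] (model, year, unit, and question of response i) and the variance symbol σ_q² are assumptions introduced here.

```latex
\operatorname{logit}\,\Pr(\text{correct}_i = 1)
  = \beta_0
  + \beta^{\text{model}}_{m[i]}
  + \beta^{\text{year}}_{y[i]}
  + \beta^{\text{model}\times\text{year}}_{m[i],\,y[i]}
  + \beta^{\text{unit}}_{u[i]}
  + b_{q[i]},
\qquad
b_q \sim \mathcal{N}\!\left(0,\ \sigma_q^2\right)
```

Each fixed-effect term is zero at its reference level, and the random intercept b_q absorbs question-level difficulty shared by both models.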

Generalized linear mixed model (GLMM) diagnostics.

Posterior predictive checks indicate that the predicted probability intervals closely align with the observed distribution of correct responses, suggesting the model effectively captures key structural variations. Binned residual plots further confirm a good fit: most binned averages lie within the expected confidence bands, with only slight deviation at higher predicted probabilities.

Residual distributions approximate uniformity, with no evidence of overdispersion or influential outliers. All fixed-effect terms present acceptable multicollinearity levels, with Variance Inflation Factors (VIFs) consistently below the critical threshold of 10. The random intercepts associated with question_id display an approximately normal distribution, validating the assumption of normally distributed random effects.

Together, these diagnostics support the appropriateness of the GLMM specification and lend confidence to subsequent statistical inferences regarding the effects of model type, year, and unit on prediction accuracy.

In the Influential Observation Figure, the blue points represent the model’s predicted results, while the green points represent the actual observed data. The closeness between the green and blue points reflects the accuracy of the model’s predictions. In most cases, DeepSeek-R1 and ChatGPT-4o show a close match between the predicted and actual data, especially in fact-based recall questions. However, there are some data points that exhibit significant deviations, which are identified as outliers. These deviations often occur in multi-step reasoning questions (such as diagnostic reasoning tasks), indicating errors in the model’s reasoning that lead to discrepancies in its predictions.

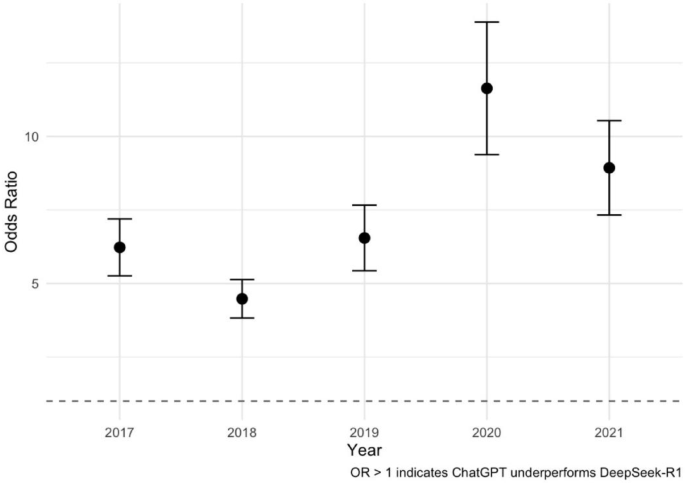

Odds ratios (ORs) were estimated using a generalized linear mixed model (GLMM) with a logit link, controlling for year and subject effects and including question ID as a random intercept. Values greater than 1 indicate higher odds of an incorrect response from ChatGPT-4o relative to DeepSeek-R1. Across all five years (2017–2021), DeepSeek-R1 consistently and significantly outperformed ChatGPT-4o (all p < 0.0001). The largest performance disparity occurred in 2020 (OR = 11.63), meaning that ChatGPT-4o’s odds of generating an incorrect answer were more than 11 times those of DeepSeek-R1. This finding aligns with observed trends in model stability and reasoning depth and supports the superior diagnostic inference capabilities of reinforcement-tuned models like DeepSeek-R1 (see Table 2 for pairwise odds ratios across years).
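An odds ratio compares odds rather than probabilities, so its practical meaning depends on the reference error rate. The sketch below applies the reported 2020 odds ratio to a hypothetical DeepSeek-R1 error rate of 5%; that rate and the function name are assumptions for illustration and do not come from the study.

```python
def prob_from_odds_ratio(reference_prob, odds_ratio):
    """Probability whose odds equal `odds_ratio` times the odds of `reference_prob`."""
    odds = reference_prob / (1 - reference_prob) * odds_ratio
    return odds / (1 + odds)


reference_error = 0.05  # hypothetical DeepSeek-R1 error rate, not from the study
chatgpt_error = prob_from_odds_ratio(reference_error, 11.63)  # 2020 OR from the text
risk_ratio = chatgpt_error / reference_error
```

Under this assumed reference rate the implied ChatGPT-4o error probability is about 0.38, a risk ratio of roughly 7.6, which is smaller than the odds ratio itself.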

As shown in Fig. 5, each point represents the estimated odds ratio for a given year, with vertical lines showing 95% confidence intervals based on a generalized linear mixed model (GLMM) with a logit link. ORs greater than 1 suggest that ChatGPT-4o was more likely to answer incorrectly than DeepSeek-R1. In all five years, the ORs remained significantly above 1, indicating consistent underperformance of ChatGPT-4o relative to DeepSeek-R1. The disparity peaked in 2020 and remained high in 2021, reflecting a widening performance gap.

Odds ratio trends indicating inferior performance of ChatGPT-4o relative to DeepSeek-R1.

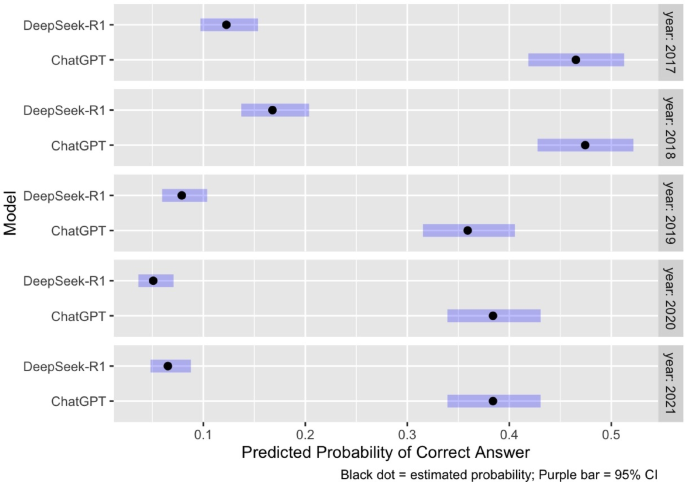

Fig. 6 shows the predicted probabilities of correct answers for ChatGPT-4o and DeepSeek-R1 across 2017–2021, as estimated by the GLMM. Each black dot corresponds to the model’s mean predicted accuracy for that year, while the purple horizontal bars represent 95% confidence intervals. DeepSeek-R1 consistently shows higher predicted accuracy than ChatGPT-4o in all years.

GLMM-estimated accuracy of ChatGPT-4o and DeepSeek-R1.

The largest performance gap appears in 2020, where DeepSeek-R1 reaches its peak predicted probability while ChatGPT-4o remains substantially lower. ChatGPT-4o demonstrates moderate inter-year variability, while DeepSeek-R1 maintains a more stable high performance. The non-overlapping confidence intervals in most years indicate that the performance difference between the two models is statistically significant.

Model reasoning logic comparison

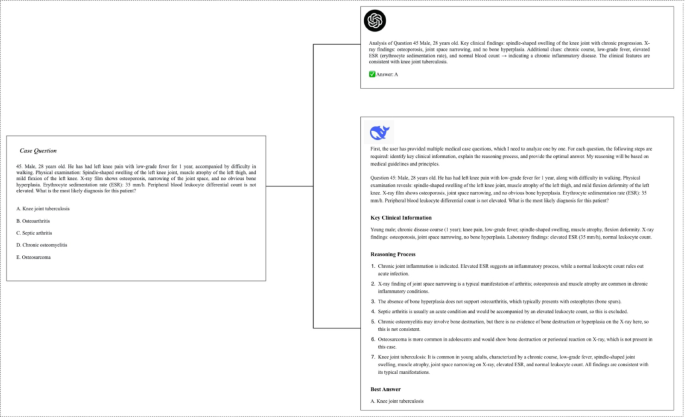

In Fig. 7, we selected a representative diagnostic analysis question to demonstrate the differing reasoning approaches of ChatGPT-4o and DeepSeek-R1 under the step-by-step reasoning prompt (Prompt 3). This case is a typical “multidisciplinary knowledge integration” question, which involves three core medical disciplines in the NMLE: diagnostics (the fundamental supporting discipline), orthopedics within surgical sciences (the core clinical discipline), and infectious diseases and rheumatology within internal medicine (supplementary disciplines). The primary objective of this question is to assess the practitioner’s ability to integrate clinical information into an accurate diagnosis, which is one of the essential foundational skills in clinical practice.

Diagnostic analysis example.

Through the comparison of diagnostic reasoning paths between DeepSeek-R1 and ChatGPT-4o, we observe the following:

ChatGPT-4o’s Diagnostic Pathway:

Step 1: Identifying Key Clinical Information: ChatGPT-4o recognizes the patient’s chronic knee pain, low-grade fever, and relevant physical findings (e.g., muscle atrophy, spindle-shaped knee swelling).

Step 2: Considering Multiple Possible Diagnoses: The model considers differential diagnoses such as osteoarthritis, septic arthritis, and chronic osteomyelitis, but lacks detailed reasoning about the absence of bone destruction or hyperplasia, which is important in ruling out osteosarcoma.

Step 3: Reasoning Process: ChatGPT-4o focuses on broad symptom categories such as inflammation but does not account for the complexity of the patient’s clinical history or the multidisciplinary nature of the question.

DeepSeek-R1’s Diagnostic Pathway:

Step 1: Detailed Clinical Analysis: DeepSeek-R1 accurately integrates the patient’s symptoms (chronic course, low-grade fever, spindle-shaped knee swelling) with the underlying pathophysiology, associating them with chronic inflammatory conditions such as tuberculosis.

Step 2: Focused Differential Diagnosis: DeepSeek-R1 emphasizes specific findings, like the absence of bone hyperplasia (ruling out osteoarthritis) and notes that the lack of leukocytosis makes septic arthritis less likely.

Step 3: Reasoning Process: The model correctly identifies that the key clinical information suggests knee joint tuberculosis, a diagnosis supported by the combination of chronic disease progression, non-destructive osteopathy on X-ray, and elevated ESR.

Diagnostic analysis: impact of prompts on error types

While Table 3 demonstrates that a performance gap exists, this section provides a diagnostic analysis of why it exists. We conducted an ablation study on a 200-question subset (randomly sampled from 2021) to test how prompt design influences performance and error types. The 2021 dataset was chosen as it presented significant cross-disciplinary complexity.

Table 3 reveals a critical finding: ChatGPT-4o’s performance is highly sensitive to prompt structure. When forced to use “Step-by-step reasoning” (Prompt 2), its accuracy increased by 24.5 percentage points (from 63.5% to 88.0%). In contrast, DeepSeek-R1’s performance, already high, gained only 2.5 percentage points.
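The gains above can be read as percentage points, as relative improvements, or as additional questions answered correctly on the 200-question subset. The sketch below works through that arithmetic using the accuracies reported in this section; the function name `gain_summary` is ours.

```python
SUBSET_SIZE = 200  # questions in the 2021 ablation subset


def gain_summary(before_pct, after_pct, n_questions=SUBSET_SIZE):
    """Return (percentage-point gain, relative gain in %, extra correct answers)."""
    point_gain = after_pct - before_pct
    relative_gain = 100 * point_gain / before_pct
    extra_correct = round(point_gain / 100 * n_questions)
    return point_gain, relative_gain, extra_correct


# Baseline vs. Prompt 2 accuracies reported in the text.
chatgpt = gain_summary(63.5, 88.0)
deepseek = gain_summary(93.5, 96.0)
```

For ChatGPT-4o this gives 24.5 percentage points, a relative gain of about 38.6%, and 49 additional correct answers; for DeepSeek-R1 it gives 2.5 percentage points, about 2.7%, and 5 additional correct answers.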

To diagnose the cause of this discrepancy, we analyzed a representative sample of errors from this subset, classifying them according to our methodology (Table 4).

In Table 4, it is evident that the accuracy of DeepSeek-R1 across different prompt types shows minimal variation. Specifically, the accuracy for the Baseline prompt is 93.5%, while Prompt 2 and Prompt 3 result in accuracy rates of 96% and 95.5%, respectively. This suggests that, despite the use of more detailed reasoning or concise answer prompts, the performance of DeepSeek-R1 does not differ significantly across these conditions.

ChatGPT-4o, by contrast, varies much more across prompts: relative to its 63.5% baseline, Prompt 2 achieved an accuracy of 88% and Prompt 3 an accuracy of 77.5%. The detailed reasoning prompt (Prompt 2) therefore produced the largest improvement.

This qualitative analysis provides the diagnostic explanation for the quantitative gap (Table 3). The sample shows that ChatGPT-4o’s baseline errors (e.g., Q14, Q20a, Q53, Q117a, Q117b) are frequently Diagnostic reasoning errors.

Crucially, these are the exact types of errors that are significantly corrected when the model is forced to use the “Step-by-step reasoning” (Prompt 2) prompt. This strongly suggests that ChatGPT-4o’s primary deficit is not a lack of medical knowledge, but rather a failure to spontaneously apply multi-step diagnostic reasoning to complex cases. It defaults to a “fast thinking” mode, leading to reasoning failures.

DeepSeek-R1, conversely, appears to utilize a more robust reasoning pathway by default, aligning with its design. Its baseline performance already reflects this, which is why Prompt 2 offers only marginal improvement. This analysis, therefore, moves beyond a descriptive “what” (DeepSeek-R1 is better) to a diagnostic “why” (because ChatGPT-4o fails to apply reasoning unless explicitly prompted to do so).
