Development and validation of the clinical educator self-regulated learning scale (CTSRS): insights from faculty development | BMC Medical Education

Study design and setting

A mixed-method, cross-sectional design was employed to develop and validate a scale aimed at evaluating clinical educators’ self-regulated learning (SRL) in the context of teaching. The CTSRS was designed to assess how clinical educators regulate their teaching behaviors, particularly after participating in faculty development programs. The scale development followed a sequential exploratory approach, integrating qualitative interviews to generate items and quantitative validation using factor analysis to confirm the structure. This methodology aligns with best-practice recommendations for educational measurement development, particularly in healthcare settings. The study took place at Chang Gung Memorial Hospital in Taiwan, an 11,225-bed medical centre with seven branches across the country. Ethical approval was obtained from the Chang Gung Medical Foundation Institutional Review Board (IRB: 104-9774B), and all procedures adhered to the Declaration of Helsinki.

Participants

We recruited participants from various medical disciplines, including medical doctors, nurses, and other healthcare professionals. Eligible participants were clinical educators who had completed a structured three-month faculty development program offered at one of four Chang Gung Memorial Hospital branches (Linkou, Keelung, Chiayi, or Kaohsiung). Informed consent was obtained from each participant prior to data collection, and participation was entirely voluntary.

Procedure

The development of the CTSRS incorporated both qualitative and quantitative methods. Following the guidelines for rigorous instrument development in educational research outlined by Artino Jr., La Rochelle, and colleagues [33], the CTSRS was developed and validated in two phases. Phase One involved item generation through in-depth interviews with clinical educators, followed by iterative expert review to refine the item pool. These interviews were guided by Zimmerman’s three-phase model of SRL and aimed to explore educators’ real-world challenges and strategies in regulating their teaching. Phase Two focused on testing the scale’s psychometric properties, including construct validity and reliability, using confirmatory factor analysis with a larger sample.

Phase one: development of the scale

The scale development began with a series of narrative interviews involving 52 clinical educators. Participants had a mean age of 42.6 years and an average of 11.3 years of teaching experience. The sample included 28.8% male (n = 15) and 71.2% female (n = 37). Professional roles were distributed across physicians (n = 18, 34.6%), nurses (n = 20, 38.5%), and other health professionals, including dietitians, radiologic technologists, and pharmacists (n = 14, 26.9%). The interviews centered on their experiences during faculty development and the application of new knowledge and skills in clinical teaching. Additionally, the interviews explored factors that either facilitated or hindered the implementation of training outcomes within their professional practice as trainers, trainees, or training managers. These interviews aimed to gain detailed insights into the self-regulatory practices of clinical educators, their teaching experiences, challenges, and strategies. The interviews were semi-structured and theory-informed, using Zimmerman’s three-phase model of SRL to guide data collection. We developed the interview guide specifically for this study; it has not been published previously. An English version of the interview questions is available in Supplementary 1. Open-ended questions were used to facilitate in-depth discussions and allow participants to share personal stories reflecting aspects of SRL in clinical settings.

Following these interviews, preliminary findings were reviewed by an expert panel composed of six experienced academics and practitioners in medical education. The panel carefully assessed the content, provided crucial feedback, and suggested modifications to ensure that the scale items accurately reflected the core dimensions of SRL as identified during the interviews. This panel also helped ensure theoretical coherence with Zimmerman’s framework, linking emerging codes to self-monitoring, goal-setting, self-evaluation, and adaptive functioning. This iterative process between narrative collection and expert evaluation played a vital role in refining the scale into a robust tool tailored for assessing self-regulation among clinical educators.

Drawing on Zimmerman’s model of self-regulation and insights from semi-structured interviews, the initial version of the CTSRS was crafted, comprising 78 items distributed across eleven factors. This first iteration of the CTSRS was administered to a purposive sample of 25 clinical educators to identify and refine questions that lacked clarity or relevance. Participants had a mean age of 43.1 years and an average of 12.7 years of teaching experience. Of the sample, 44.0% were male (n = 11) and 56.0% were female (n = 14). Professional roles included physicians (n = 9, 36.0%), nurses (n = 10, 40.0%), and other health professionals such as family physicians, radiologists, and respiratory therapists (n = 6, 24.0%). The criteria for item refinement were meticulously established to ensure the scale’s relevance and precision. Initially, the focus was on clarity and specificity; any item prone to misinterpretation or ambiguity was either modified or omitted. Each item was evaluated using a 5-point Likert scale ranging from one (strongly disagree) to five (strongly agree).

Item selection followed a structured process:

1. Items with a pairwise correlation coefficient above 0.7 were evaluated based on their mean and variance, retaining those with more central means and higher variance;
2. Items that showed negative or cross-loading in the exploratory factor analysis were excluded;
3. Experts assessed each item’s alignment with the theoretical underpinnings of SRL, ensuring that core elements from Zimmerman’s model (e.g., forethought, performance, and self-reflection) were adequately represented.
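The first screening step can be illustrated with a minimal Python sketch. It is not the authors' procedure: the source does not state how mean and variance were weighed against each other, so the tie-break used here (prefer the mean closer to the scale midpoint of 3, then the higher variance) is an assumption, as are the names `pearson`, `screen_redundant`, and the example scores.

```python
from itertools import combinations
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation between two equal-length score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        return 0.0  # a constant item carries no correlation information
    return cov / (sx * sy)


def screen_redundant(responses, threshold=0.7, midpoint=3.0):
    """Return the set of item names to drop.

    responses: dict mapping item name -> list of 1-5 Likert scores.
    Assumed rule: within each pair correlating above `threshold`, keep the
    item whose mean is closer to `midpoint`; on a tie, keep the item with
    the higher variance.
    """
    def badness(item):
        scores = responses[item]
        return (abs(mean(scores) - midpoint), -variance(scores))

    dropped = set()
    for a, b in combinations(responses, 2):
        if a in dropped or b in dropped:
            continue
        if pearson(responses[a], responses[b]) > threshold:
            # The item with the larger key is less central or less variable.
            dropped.add(max((a, b), key=badness))
    return dropped


# Hypothetical responses from six raters; not data from the study.
example = {
    "q1": [1, 2, 3, 4, 5, 3],
    "q2": [2, 3, 4, 5, 5, 4],
    "q3": [5, 1, 4, 2, 3, 3],
}
print(screen_redundant(example))
```

In this hypothetical example, `q1` and `q2` correlate above 0.7, and `q2` is dropped because its mean lies further from the midpoint.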

Redundancy was rigorously addressed; overlapping content led to the consolidation of items, retaining only those that were most comprehensive and representative. Detailed discussions among experts determined which items most clearly and directly measured the intended constructs.

In addition, practical examples drawn from the narrative interviews were used to confirm the contextual appropriateness of items, ensuring ecological validity and relevance to real-world clinical teaching challenges. This iterative process of evaluation and revision effectively reduced the number of items from 78 to 35, enhancing both the scientific rigor and applied utility of the scale.

The refined CTSRS ultimately included 35 items across eight factors:

- Past Teaching Experiences (3 items),
- Intrinsic Interest (4 items),
- Self-Adjustment during the Teaching Process (10 items),
- Teaching Opportunities (6 items),
- Resistance to Change in Teaching Methods (2 items),
- Peer Comparison and Self-Evaluation (3 items),
- Help-Seeking and Peer Collaboration (3 items),
- Application of Learning from Faculty Development (4 items).

Descriptions of these factors are presented in Table 1, illustrating the theoretical and empirical foundation of the final model.

Phase two: validation of the CTSRS scale

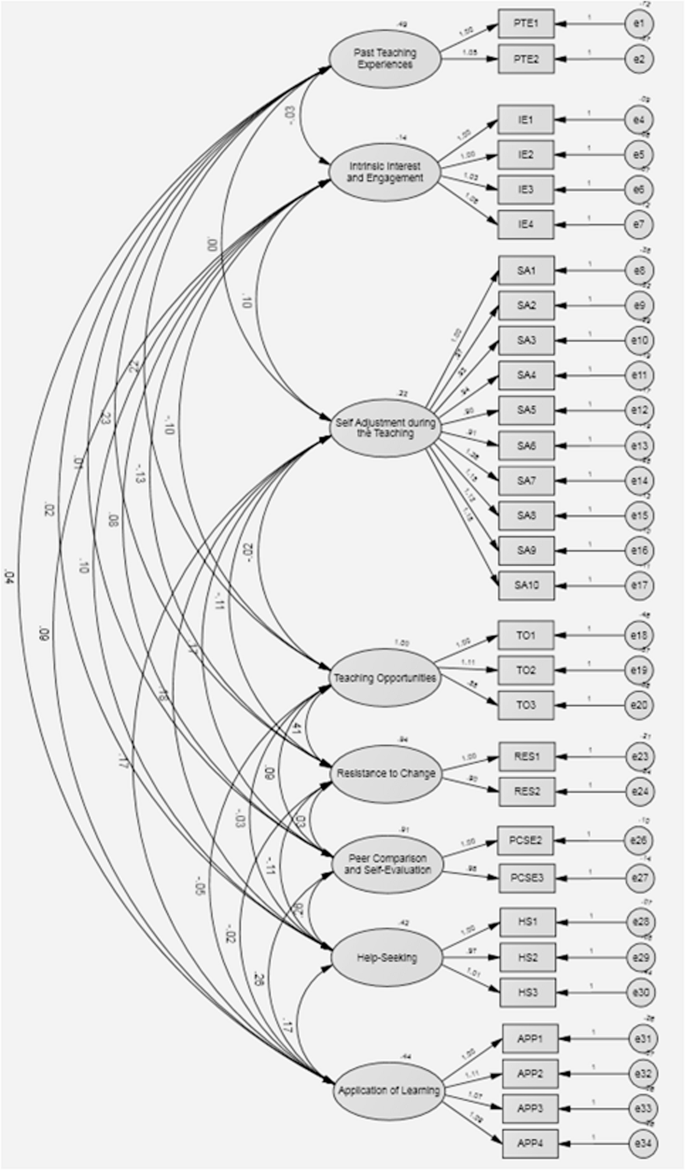

Confirmatory factor analysis (CFA) was employed to validate the hypothesized structure of the CTSRS, which was developed through a theory-informed, mixed-method approach. CFA was selected over exploratory factor analysis (EFA) because the item structure was guided by Zimmerman’s cyclical model of SRL and supported by empirical data from narrative interviews with clinical educators. This a priori conceptualization justified the use of CFA to test the model’s construct validity rather than explore latent structures [34, 35]. Each factor was designed to reflect a specific domain of self-regulation in clinical teaching. For instance, “Self-adjustment during the teaching process” and “Peer comparison and self-evaluation” map onto Zimmerman’s performance and self-reflection phases. The integration of theoretical constructs and qualitative insights ensured that the model was both educationally meaningful and contextually grounded.

CFA was conducted in AMOS version 28.0 [36]. Model fit was evaluated using multiple indices: chi-square (χ²), Comparative Fit Index (CFI), Tucker-Lewis Index (TLI), Normed Fit Index (NFI), and Root Mean Square Error of Approximation (RMSEA), following accepted thresholds (e.g., CFI > 0.90, RMSEA < 0.08) [37]. Standardized factor loadings were examined for statistical significance, and items with loadings below 0.50 were removed [38].

Prior to CFA, content validity was established through expert panel review. Six medical educators reviewed the draft items for clarity, relevance, and alignment with SRL theory. A formal content validity index (CVI) was not computed; instead, items were retained only after consensus approval, because expert agreement was built through multiple structured feedback rounds rather than a single quantitative CVI rating. The use of consensus panels in medical education instrument development is a recognized alternative for content validation when guided by strong theoretical alignment and context-specific expertise [33].

A total of 378 clinical educators from five academic medical institutions in Taiwan were recruited using purposive sampling. Inclusion criteria required active involvement in clinical teaching and recent completion of a faculty development program. The mean age was 41.3 years, with an average of 12.1 years of teaching experience. The sample included 21.2% male (n = 80) and 78.8% female (n = 298). Professional roles included physicians (n = 107, 28.3%), nurses (n = 139, 36.8%), and other specialists such as those in pediatrics and surgery (n = 132, 34.9%). We acknowledge the non-random nature of the sample and the potential for selection bias, particularly the possibility that participants with higher motivation for faculty development may have been more likely to participate. Additionally, institutional culture may have influenced participant responses. These limitations suggest caution in generalizing results to other populations or systems without further validation.

After the first CFA, six items (Items 3, 8, 17, 18, 19, and 26) were removed due to standardized loadings below the 0.50 threshold. These items were also reviewed for conceptual redundancy and weak interpretability. A second CFA was performed on the revised 30-item scale, which retained the original eight-factor structure. Each factor consisted of 2 to 9 items, with final factor loadings ranging from 0.60 to 0.95, indicating strong internal consistency and construct alignment.
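The loading-based elimination rule can be sketched in a few lines of Python. This is only an illustration of the 0.50 cutoff described above: the function name `drop_weak_items` and the loadings are hypothetical, and in practice the model is re-estimated after removal, as was done with the second CFA.

```python
def drop_weak_items(loadings, cutoff=0.50):
    """Split items into retained and removed by standardized loading.

    loadings: dict mapping item name -> standardized factor loading.
    Items with a loading below `cutoff` are removed.
    """
    retained = {item: value for item, value in loadings.items() if value >= cutoff}
    removed = sorted(item for item in loadings if item not in retained)
    return retained, removed


# Hypothetical loadings for illustration only; not values from the study.
example_loadings = {"item_a": 0.72, "item_b": 0.44, "item_c": 0.50, "item_d": 0.31}
retained, removed = drop_weak_items(example_loadings)
print(removed)  # ['item_b', 'item_d']
```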

The final model yielded acceptable fit indices: χ²(377) = 1116.17, p < .05; CFI = 0.90; TLI = 0.89; NFI = 0.86; and RMSEA = 0.07. Cronbach’s alpha coefficients for the eight factors ranged from 0.73 to 0.92, indicating good internal reliability. Figure 1 presents the standardized path diagram. Table 2 provides detailed factor loadings before and after item elimination, and Table 3 shows inter-factor covariances and correlations, supporting the theoretical coherence of the CTSRS framework.
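As a rough consistency check on these statistics, the sketch below recomputes RMSEA from the reported chi-square and degrees of freedom and shows how Cronbach's alpha is obtained from item scores. It assumes the common single-group formula RMSEA = sqrt(max(χ² − df, 0) / (df · (N − 1))) and that all 378 recruited educators were included in the analysis; neither detail is stated in the source. The function names are illustrative.

```python
from math import sqrt
from statistics import variance


def rmsea(chi2, df, n):
    """RMSEA under the assumed single-group formula with N - 1 in the denominator."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cronbach_alpha(items):
    """Cronbach's alpha for one factor.

    items: list of per-item score lists, one score per respondent,
    all lists of equal length.
    """
    k = len(items)
    totals = [sum(row) for row in zip(*items)]  # each respondent's summed score
    item_variance = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Reported values: chi-square = 1116.17, df = 377; N = 378 is an assumption.
print(round(rmsea(chi2=1116.17, df=377, n=378), 3))  # 0.072
```

Under these assumptions the recomputed value rounds to the reported RMSEA of 0.07.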

Path model and factor loadings of CFA
